One breach is all it takes: why law firms can’t afford cyber complacency

Once perceived as a back-office function, cyber security is now recognised as essential for client trust and firm reputation.

Lawyers rely on reputation, discretion, and data integrity.

Yet many remain underprepared for advanced cyber threats and the vulnerabilities introduced by AI.

A single breach can damage not just systems, but hard-won reputations.

We surveyed 700+ lawyers and legal professionals to find out how teams are protecting their brands and businesses from more advanced cyber attacks.

The emerging threat landscape

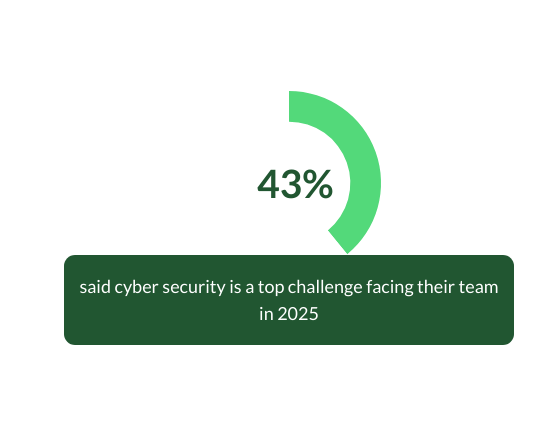

Among the challenges legal professionals face each year, cyber security consistently ranks at or near the top.

LexisNexis' most recent survey of UK lawyers, conducted in July 2025, found that cyber security was once again among the top five challenges. More than two in five (43%) listed it as one of the three most pressing challenges facing their team in the next 12 months.

This isn't a struggle unique to the legal sector. The UK government's 2025 Cyber Security Breaches Survey found that 70–74% of medium and large organisations had experienced a cyber security breach, with phishing responsible for over 85% of incidents.

Once viewed as an IT issue, cyber security now underpins every aspect of client service, compliance and business continuity. As organisations introduce AI-powered tools into their existing processes and workflows, concerns have only grown.

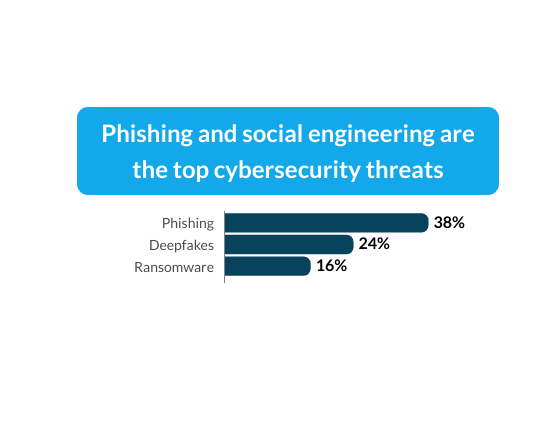

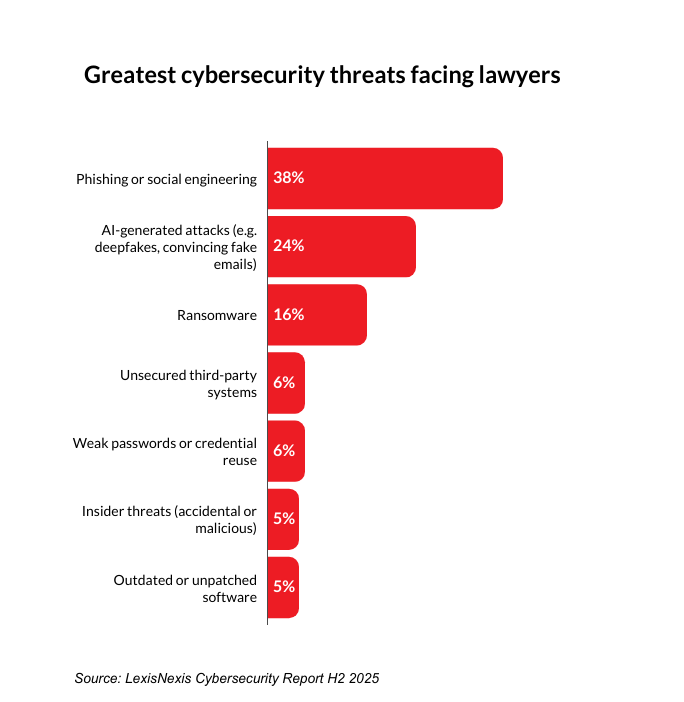

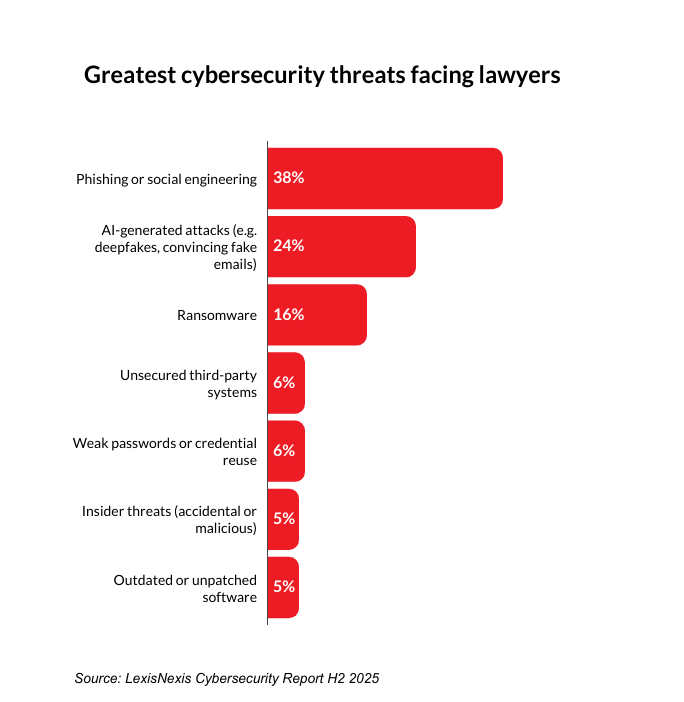

According to our survey, phishing and social engineering scams top the list of cyber security threats, cited by 38% of respondents. This rose to 44% among leaders of small law firms.

Martyn Styles, Chief Information Security Officer at Bird & Bird, says phishing has been a major problem for law firms for many years now.

"We continue to invest in advanced detection technology to help combat this threat."

One recent example, according to Styles, is templated fake invoices sent en masse to organisations and made to look as though they came from a well-known law firm.

A leader of a small law firm told us:

"I am fearful that, as phishing scams become more sophisticated, and as AI use by cyber criminals becomes more prevalent, the likelihood of 'attacks' succeeding will increase."

The second biggest cyber security threat to the legal profession is AI-generated attacks, such as deepfakes or convincing fake emails (24%).

One partner at a different practice stated their fears bluntly:

"Our cybersecurity practices are not matching AI growth. Unless we catch up, we shall decay and die."

Dawn Faulkner, Director of IS at Shoosmiths, says these attacks are becoming more prevalent, although their sophistication is still developing.

"While technical controls are in place to reduce the risk of AI-driven attacks, deepfake detection capabilities are still maturing and are not yet as embedded or widespread as anti-phishing technologies."

The most effective layer of defence is the human firewall, says Faulkner.

"Firms that have not yet implemented regular, scenario-based phishing simulations or deepfake awareness training are significantly more exposed to these evolving threats."

While AI technology is helping legal teams deliver work faster, it is also contributing to a more complex threat landscape.

Lawyers were far less worried about weak passwords and insider threats, cited by only 6% and 5% respectively.

AI threats are headline-grabbing, says Faulkner, but fundamentals such as enforcing strong password policies, using password managers and monitoring for unusual internal activity are often overlooked or underfunded.

However, many are taking additional precautions to protect their businesses and their clients.

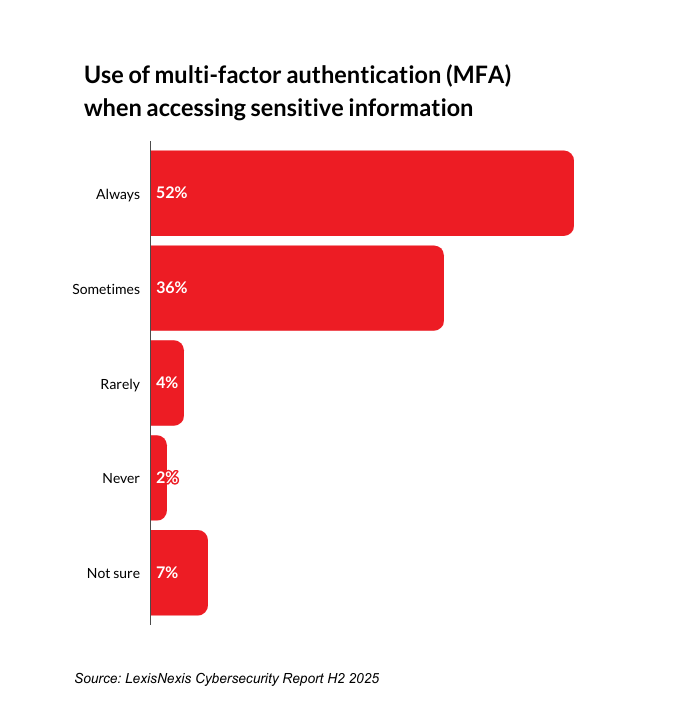

Just over half (52%) of lawyers said they always use multi-factor authentication (MFA) when accessing sensitive systems or data, while 36% said they sometimes use it, depending on the platform. Usage was higher among in-house leaders, 59% of whom always use MFA and 39% sometimes do.

One in-house legal leader at a major corporate said:

"Core protections like multi-factor authentication, encryption, and regular vulnerability scans are solid, but AI introduces new vulnerabilities like prompt disclosure, model manipulation, and data-privacy gaps in cloud environments."

Strengthening vendor due diligence, conducting AI-specific threat assessments, and running incident-response drills are essential, they said.

"Continuous monitoring and adaptive threat intelligence will be critical to sustaining confidence in our cybersecurity posture."

Styles says users may choose a less secure path simply because they are trying to work efficiently, rather than deliberately trying to exfiltrate data.

"IT professionals are often our own worst enemy, making legitimate sharing and collaboration far too difficult. We unwittingly lead [users] to short-circuit adequate security protections, which will keep happening as long as they get found out."

Although some smaller firms still question the robustness of their cyber security measures, it is encouraging that awareness of the risks is strong, given how high the stakes are for client trust and reputation.

Risk of reputation loss

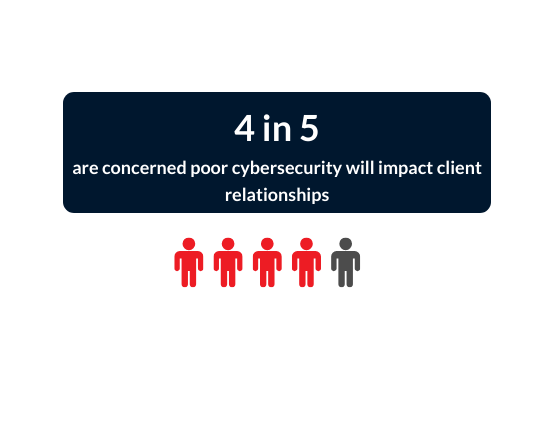

For legal professionals, reputations are everything. Relationships built over decades can be undone in moments if a data breach compromises client trust.

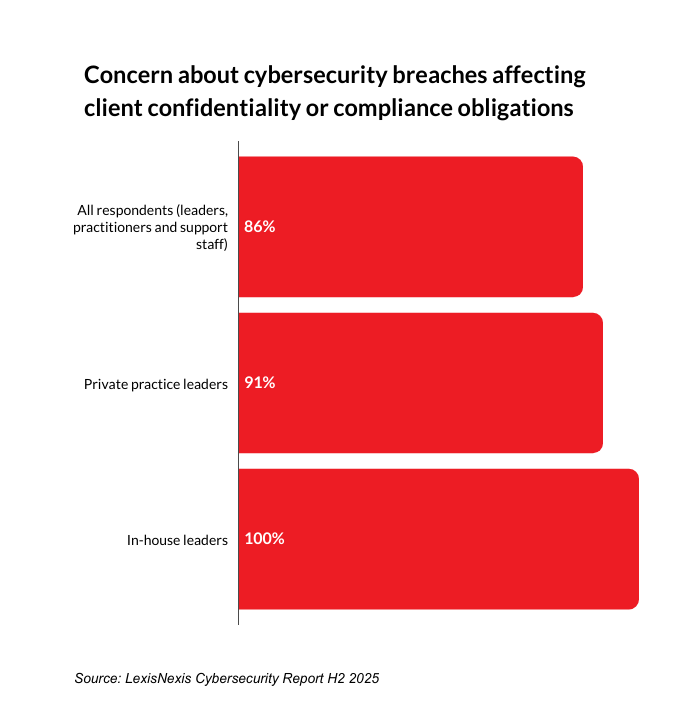

More than four in five lawyers worry about cyber breaches compromising their clients' confidentiality.

Unsurprisingly, 86% of lawyers said they are concerned about cyber security incidents affecting client confidentiality or compliance obligations. This rose to 91% among law firm leaders and 100% among General Counsel.

An IT manager of a small law firm said:

"We are dynamically and strategically adapting to the evolving AI landscape to safeguard the accessibility, confidentiality, and integrity of our client services. This includes strengthening our cybersecurity posture and ensuring our systems remain fit for purpose."

For firms handling sensitive client matters, the risk isn’t just theoretical: a breach can lead to legal action, professional misconduct investigations, and loss of key accounts. In an environment where trust is currency, cybersecurity is no longer just an IT issue; it’s a business-critical imperative.

Cyber security in the AI era

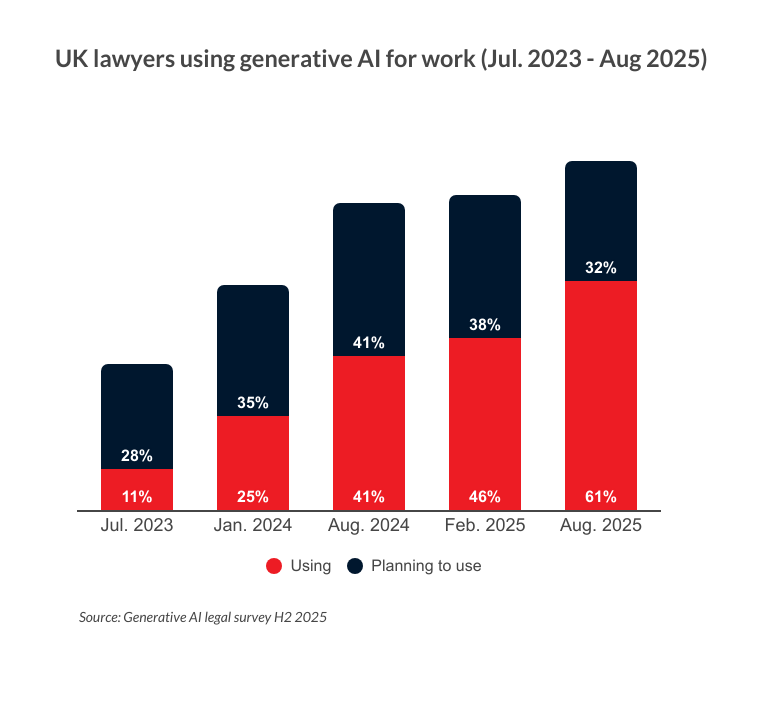

The use of AI by legal professionals is growing at pace. Three in five (61%) lawyers now use AI for work purposes, while almost a third (32%) plan to use it in the near future.

For the legal sector, this rapid adoption signals a shift towards greater efficiency, automation, and data-driven insight. But teams must also move quickly to ensure AI tools are implemented responsibly, securely, and in line with regulatory expectations.

New security gaps will emerge, says Styles from Bird & Bird.

"The speed and breadth of data sources that can be searched will highlight weak permissions models."

"We have to carefully consider how an attacker might utilise AI to access sensitive data," he says.

"Testing AI tools is becoming a major part of the information security team’s due diligence requirements."

More than three-quarters (77%) of lawyers still harbour concerns about relying on inaccurate information generated by AI.

A partner of a small law firm told us general AI tools are "raising concerns due to the accuracy of the data being in doubt."

Recent scandals in which lawyers cited non-existent cases invented by AI in court are particularly worrying, the partner said.

Unsurprisingly, practitioners are more comfortable with AI when it is built on trusted sources. Almost nine in ten (88%) of those using AI said they prefer tools grounded in authoritative legal content.

Leaking confidential data was the second most common concern, at 47%.

“We ensure that the data principles and risk assessment tools we have in place are robust, to help us effectively manage risk,” said Deborah Finkler, now the former Managing Partner of Slaughter and May.

Laura Hodgson, the AI Lead at Linklaters, says:

“One of the ways we seek to reduce risk is through efforts on adoption such as training, communication and using vocal champions to explain the benefits and address any concerns.”

While the benefits are plentiful, many legal professionals are still grappling with how to use generative AI safely.

- Only 24% said their organisation had provided them with appropriate training to use AI safely.

- Only 28% have an AI policy that is easy to understand and follow, rising to 45% in large law firms and 37% among in-house teams.

Faulkner from Shoosmiths says firms must ensure robust data governance is in place to prevent non-compliant handling of sensitive client data.

"Firms must conduct thorough vendor risk assessments aligned with standards like ISO 27001 and GDPR."

To mitigate risks such as model drift or bias, AI outputs should be regularly reviewed, she says.

"Embedding security by design, involving InfoSec early, and running Data Protection Impact Assessments and threat models are essential steps."

Building trust through secure partnerships

Reliable partnerships protect client data and safeguard reputations.

When asked about the top cyber threats legal professionals face, only 6% explicitly expressed concern about unsecured third-party systems. Yet the risks linked to external partners and tools should not be underestimated. As AI adoption grows, so does the reliance on third-party platforms and providers, making the security and integrity of these partners critical.

Having technology partners you trust, and whose capabilities will grow with your business, is crucial. Choosing partners who put security and compliance first is essential to protecting your reputation and client data. With evolving threats targeting legal professionals, partnering with a provider that combines deep domain knowledge with rigorous cyber security safeguards is no longer optional. It's a necessity.

Lexis+ operates on a robust, independently audited infrastructure, designed to meet the highest security standards.

Without trusted partners who prioritise security from the ground up, legal teams risk exposing sensitive data and compromising their reputations.

Securing trust

Cyber security threats are evolving, reputational stakes remain high, and confidence in AI safety is far from universal.

While most practitioners see the benefits of faster processes and enhanced client service, concerns around data security, bias, and hallucinations cannot be ignored.

Protecting client trust starts with rigorous security protocols, continuous staff training, and clear AI usage policies.

Most importantly, it requires choosing technology partners who prioritise compliance, resilience, and transparency at every level.

In an age where one breach can undo years of credibility, proactive investment in secure AI solutions is a business imperative.